Opinion: has Google’s ‘breakthrough’ AI LaMDA developed its own sentience?

Image credit: Lena Vargas

The jury is still out, and opinions on this latest news are mixed.

Over the past few weeks, Google has been a constant feature in the news thanks to one of its engineers, Blake Lemoine, who has claimed (and the headlines would have you believe) that the company’s AI program, LaMDA, has become sentient and attained human-level intelligence.

Google has rejected Mr Lemoine’s claims and has since dismissed him, stating that he chose to “persistently violate clear employment and data security policies that include the need to safeguard product information.”

Nevertheless, the impact of his comments remains. As you’d expect, this news has generated a lot of controversy in the tech community as professionals and laymen alike have come down on either side of the issue, with some dismissing the claim as nothing more than an attempt by the engineer to get his 15 minutes of fame, while others have argued that LaMDA should be put in the care of the state and sent off to a good school.

Okay, nobody has actually said that last part – yet. But opinions are running hot, that’s for sure.

Before we offer you our take on the situation, however, let’s start at the beginning and look at the facts.

What exactly is LaMDA?

LaMDA (Language Model for Dialogue Applications) is, in the words of Brian Christian – the author of an article in The Atlantic that looks at the LaMDA problem – “a kind of autocomplete on steroids.” As part of its training, Google engineers fed LaMDA more than a trillion words of public dialogue and web text to improve its ability to predict the next words in a sequence.
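To make the “autocomplete” idea concrete, here is a deliberately tiny sketch in Python: a bigram model that counts which word follows which in a handful of sentences, then repeatedly suggests the most frequent follower. This illustrates next-word prediction in general, not how LaMDA is implemented (LaMDA uses a large Transformer network trained on vastly more text); the corpus and the function names `train_bigrams`, `predict_next` and `autocomplete` are hypothetical, made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for every word, how often each other word follows it."""
    followers = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current][nxt] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = followers.get(word.lower())  # .get() does not create new entries
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def autocomplete(followers, prompt, max_words=5):
    """Greedily extend `prompt` one predicted word at a time."""
    words = prompt.lower().split()
    for _ in range(max_words):
        nxt = predict_next(followers, words[-1])
        if nxt is None:
            break  # no known continuation, so stop early
        words.append(nxt)
    return " ".join(words)


# A made-up toy corpus; real language models train on vastly more text.
corpus = [
    "how are you today",
    "how are you feeling",
    "are you feeling well today",
    "i am feeling well",
]
model = train_bigrams(corpus)
print(autocomplete(model, "how", max_words=5))  # → how are you feeling well today
```

The output reads like a plausible phrase, yet the program has no notion of what “feeling well” means; it is only replaying word statistics. Models like LaMDA are enormously more sophisticated, but the underlying task of predicting the next word is the same one this toy performs.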

LaMDA is built on Transformer, a neural network architecture that Google Research invented and open-sourced in 2017. According to Google, that architecture:

“Produces a model that can be trained to read many words (a sentence or paragraph, for example), pay attention to how those words relate to one another and then predict what words it thinks will come next.”

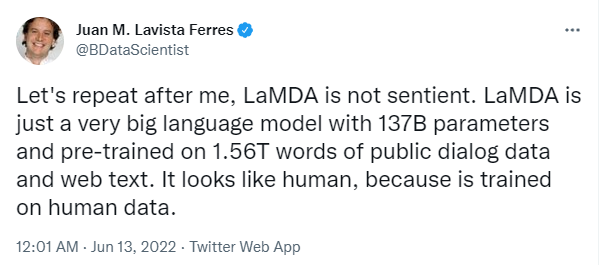
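The phrase “pay attention to how those words relate to one another” refers to the attention mechanism at the heart of the Transformer. Below is a minimal, standard-library-only Python sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)·V, for a single head. The word vectors are hypothetical two-dimensional values chosen by hand for illustration; a real Transformer (let alone LaMDA) learns its vectors and adds projections, multiple heads and many stacked layers, none of which are shown here.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    biggest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of vectors, one per word. Every query is
    scored against every key, the scores are normalised with softmax,
    and the output is the weighted average of the value vectors.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-D vectors for a three-word sentence (self-attention: Q = K = V).
# In a real model these would come from learned embeddings and projections.
words = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

outputs, weights = attention(vectors, vectors, vectors)
for word, w in zip(words, weights):
    print(word, [round(x, 2) for x in w])
```

Each printed row shows how strongly one word “attends” to every word in the sentence. The arithmetic is just dot products, a softmax and a weighted average, which is part of why pattern matching of this kind, however powerful at scale, is a long way from anything resembling awareness.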

In essence, LaMDA is an AI trained on human data to model how humans speak, so that its responses appear human. What Blake Lemoine argues is that, beyond merely appearing human, the AI effectively is human.

However, many people are arguing against this.

So, what does this all mean?

The issue of what to do when an AI becomes sentient has consumed humans since well before Skynet appeared in cinemas in the 1980s, threatening hellfire and destruction, and it raises some interesting questions.

Even if their programs aren’t yet sentient, and may never be, should companies like Google be allowed to build AIs as powerful as LaMDA free from regulation and oversight? LaMDA itself may have a fairly innocuous application, but what about when someone develops an AI to do more than hold a conversation? As we should know by now, trusting big tech companies to rein themselves in and act in a safe and responsible manner is perhaps not the best approach when we’re dealing with technologies that could upend our entire existence.

But we’re avoiding the question: is LaMDA alive?

You could ask a dozen different people the same question and you probably wouldn’t get a consistent answer, but that doesn’t necessarily mean the question is genuinely up for debate.

Unfortunately, headlines like this tend to surface every few months with some outlandish claim about the state of AI, and they are usually attempting either to prey on the public’s fears or to get someone their 15 minutes of fame. While there is no doubt that AIs such as LaMDA are incredibly powerful tools, they remain very limited.

We decided to ask a few members of the White Box team and some of our friends in the industry to see what they thought of the news.

Louis Keating (White Box Founder)

“These stories come along every so often and attract a lot of attention as clickbait suggesting that the world is going to end with the rise of Skynet (from The Terminator), but I’m always reassured by two quotes I’ve heard over the years:

“The first was at a conference I once attended, where a professor provided a great synopsis of how far AI will progress: ‘The human brain is extraordinarily complex. A monkey’s brain is far less complex but still significantly more so than any computing system and AI, because the AI is based on programming models.’

“The second is from tech author and commentator Benedict Evans, who once said, ‘Machine learning is like having infinite interns.’ In other words, they are great if you can point them in the right direction and give them a task, but otherwise, they’re more or less useless.

“Here at White Box, we work with data 100% of the time and have built many models over the years, so we are very familiar with how modelling works. Although LaMDA is definitely a great achievement, it is based on a model: a framework for what it needs to do. Much like Deep Blue, IBM’s chess-playing computer, it is fantastic at what it does, but if you gave it a different problem, it wouldn’t be able to cope.”

Diwakar Bhrugubanda (White Box Data Scientist)

“When a human-like AI solution is given the right to generate opinions, along with legal protection, it is not a stretch to say that this could lead people into complex situations, because punishments and rewards generally do not affect machines directly but do have an impact on humans. This raises a lot of ethical questions, not least: should companies be allowed to build ambiguous AI products like LaMDA at this scale without a regulatory body to validate their true boundaries?”

What do you do if it turns out that an AI actually is sentient? How does that development affect our understanding of what we consider a ‘person’ to be, not only ethically but legally as well? If an AI somehow commits a crime or harms others, traditional punishments such as prison don’t really work – so do you turn it off? Is that then different to the death sentence?

Anthony Tockar (Data Science Lead at Verge Labs)

“These headlines are clearly designed as clickbait. There is no reason whatsoever to suspect sentience from these models.

Can AI be sentient? I don’t see why not, but the current deep learning-powered mega models are nothing but pattern matchers, albeit very good ones.

We are very far away from any kind of general or human-level AI and these kinds of stories hurt the field by generating unrealistic expectations or fears among the public.”

What’s the final verdict – is LaMDA alive?

At this stage, we’re sticking with a very firm no.

Although we continue to be impressed by LaMDA and many of the other AIs that emerge each year, we’re still a long way off seeing anything that could be fairly described as sentient. Could it happen? Absolutely, but it hasn’t yet. Far from being a person trapped inside a machine, LaMDA is just a program that knew what to say to get people to pay attention – and we did.

Want to delve deeper into this world? Let’s chat.

White Box has helped numerous clients solve data problems, including those that fall on the Machine Learning and AI side of the fence. If you want to discuss how you can get more from your data, get in touch.